Title

Viewing-Distance Aware Super-Resolution for High-Definition Display

Authors

Chih-Tsung Shen, Graduate Institute of Networking and Multimedia, National Taiwan University, Taipei

Hung-Hsun Liu, Telecommunication Laboratories, Chunghwa Telecom Co., Taoyuan.

Ming-Hsuan Yang, Department of Electrical Engineering and Computer Science, University of California at Merced, Merced, CA 95344, USA.

Yi-Ping Hung, Graduate Institute of Networking and Multimedia, National Taiwan University, Taipei

Soo-Chang Pei, Department of Electrical Engineering, National Taiwan University, Taipei.

Abstract

In this paper, we propose a novel algorithm for high-definition displays that enlarges low-resolution images while maintaining perceptual constancy (i.e., the same field of view, perceptual blur radius, and retinal image size in the viewer's eyes). We model the relationship between a viewer and a display by considering two main aspects of visual perception: the scaling factor and the perceptual blur radius. As long as we enlarge an image while adjusting its blur levels on the display, we can maintain the viewer's perceptual constancy. We show that the scaling factor should be set in proportion to the viewing distance and that the blur levels on the display should be adjusted according to the focal length of the viewer. To this end, we first use edge directions to interpolate a low-resolution image as the viewing distance and the scaling factor increase. After the image is interpolated, we use local contrast to estimate its spatially-varying blur levels. We then adjust the blur levels with a parametric deblurring method that combines L1 and L2 reconstruction errors with Tikhonov and total variation regularization terms. By taking these factors into account, high-resolution images adapted to the viewing distance of a display can be generated. Experimental results on both a natural image quality metric and subjective user studies across image scales demonstrate that the proposed super-resolution algorithm for high-definition displays performs favorably against state-of-the-art methods.
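The proportionality between scaling factor and viewing distance follows from keeping the visual angle of the displayed image fixed. The sketch below is a minimal illustration under a simple head-on viewing assumption; the function names, the 0.3 m image width, and the 1 m reference distance are hypothetical values chosen for illustration and are not taken from the paper.

```python
import math

def visual_angle_deg(image_width, viewing_distance):
    """Visual angle (degrees) subtended by an image of the given width,
    viewed head-on from the given distance (same length unit for both)."""
    return math.degrees(2.0 * math.atan(image_width / (2.0 * viewing_distance)))

def scaling_factor(reference_distance, viewing_distance):
    """Scaling factor that keeps the visual angle unchanged, assuming the
    factor is simply proportional to the viewing distance."""
    return viewing_distance / reference_distance

# Hypothetical setup: a 0.3 m wide image viewed from 1 m.
reference_width = 0.3
reference_distance = 1.0
reference_angle = visual_angle_deg(reference_width, reference_distance)

for distance in (2.0, 3.0, 4.0):
    factor = scaling_factor(reference_distance, distance)
    angle = visual_angle_deg(reference_width * factor, distance)
    # The enlarged image subtends the same angle as the reference view.
    assert math.isclose(angle, reference_angle, rel_tol=1e-9)
    print(f"distance {distance:.0f} m -> factor {factor:.0f}, angle {angle:.2f} deg")
```

Doubling the distance while doubling the image size leaves the retinal image size unchanged, which is why the viewers in the subjective experiments step back as the scaling factor grows. The blur adjustment, the second ingredient of perceptual constancy, is not modeled in this sketch.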

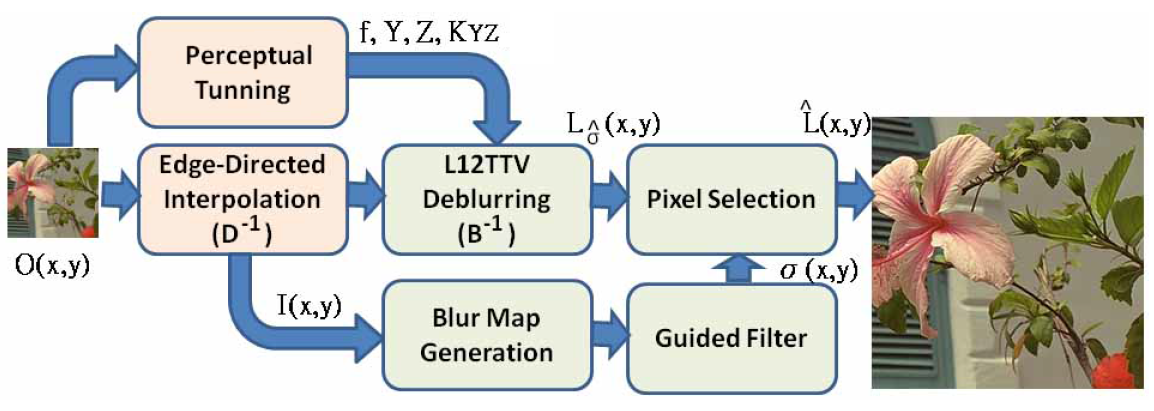

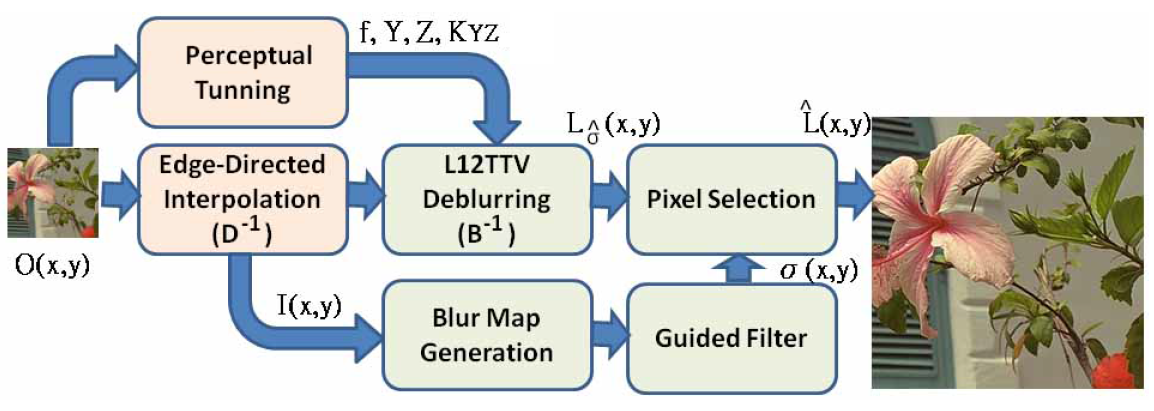

The Proposed System

The proposed super-resolution algorithm consists of four modules: edge-directed interpolation, blur map generation, L1L2TTV deblurring with perceptual-tuning parameters, and pixel selection. Different scaling factors lead to different parameters for perceptual constancy.
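As a reading aid, the deblurring module can be written as a single objective containing the four kinds of terms named in the abstract. This is only a hedged sketch: the symbols (x for the deblurred image, y for the interpolated image, k for the local blur kernel) and the weights lambda_1, lambda_2, alpha, and beta are assumptions introduced here, and the paper's exact formulation, including whether the Tikhonov term acts on the image or on its gradient, may differ.

```latex
\hat{x} = \arg\min_{x} \;
    \lambda_1 \lVert k * x - y \rVert_1        % L1 reconstruction error
  + \lambda_2 \lVert k * x - y \rVert_2^2      % L2 reconstruction error
  + \alpha \lVert x \rVert_2^2                 % Tikhonov regularization (assumed form)
  + \beta \lVert \nabla x \rVert_1             % total variation regularization
```

In a formulation of this kind, the L1 term is robust to outliers, the L2 term penalizes large residuals, and the two regularizers trade smoothness against edge preservation.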

Internal Discussion:

[Discussion1]

[Discussion2]

Tentative Matlab Code:

[Spatially-Varying Super-Resolution (Deconvolution)]

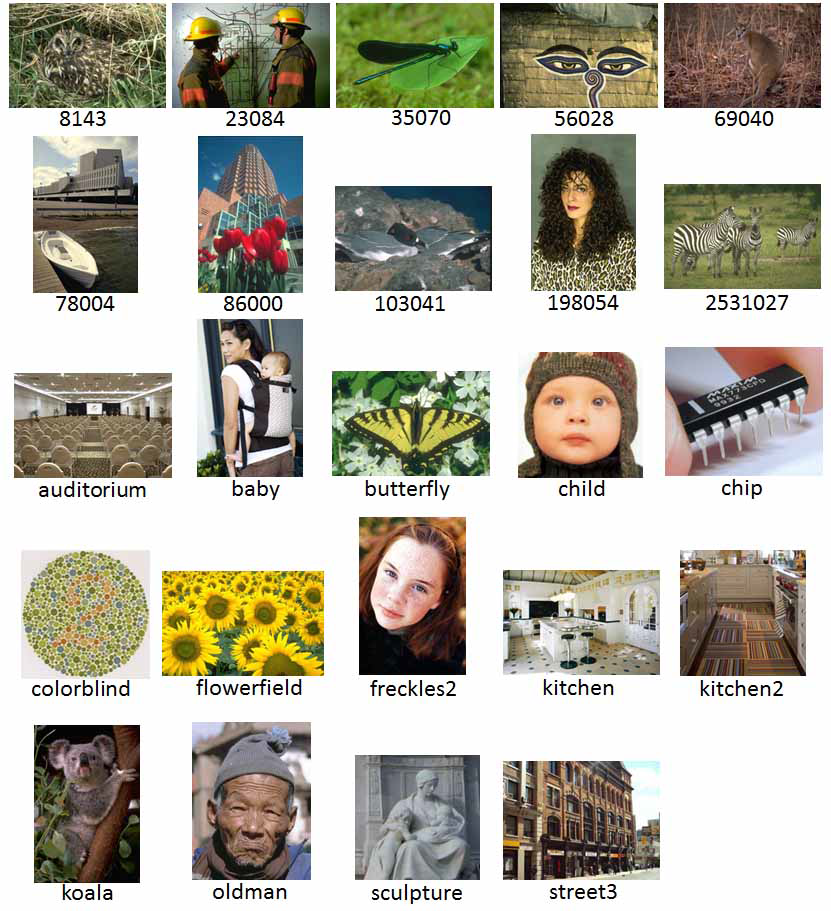

Experimental Results

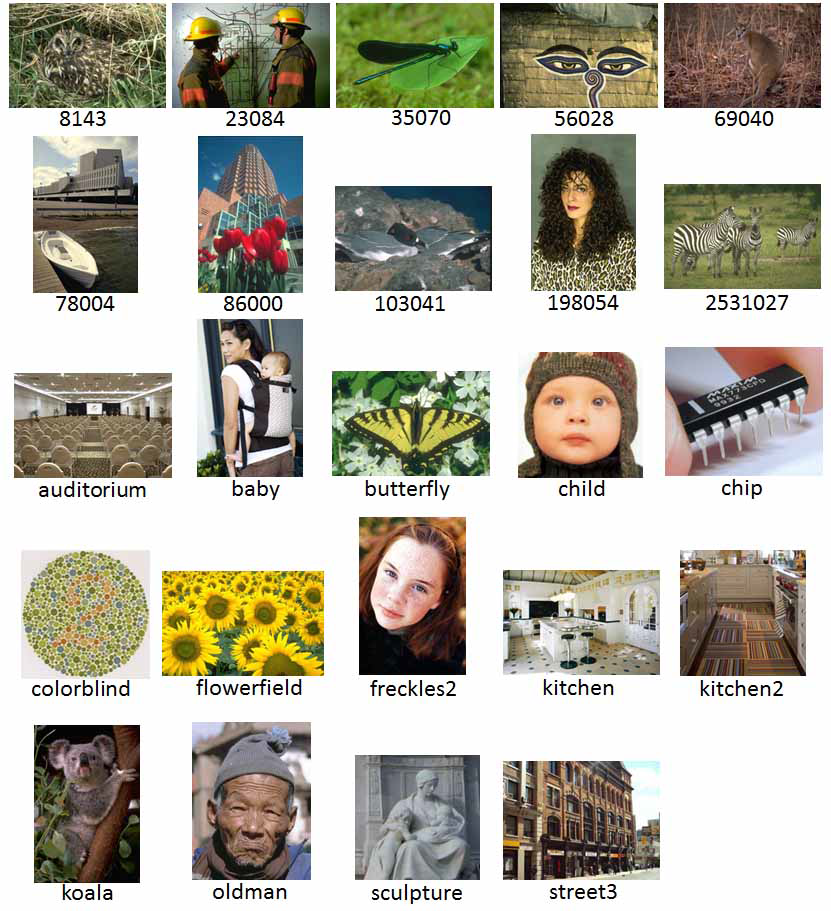

We take the images in the dataset and upsample each input image by factors of 2, 3, and 4 in both width and height.

Since the dataset is too large for viewers to examine in full, we compare our system only with the representative exemplar-based method proposed by Yang et al. [23] and the representative reconstruction-based method proposed by Mallat and Yu [18].

We provide part of the results as supplementary material:

[Real Image Data]

Parameter Setting

Finding a good setting for the four parameters is not easy.

We first set s = 0.99 to ensure sharp edges.

Then, we compare two parameter settings:

[Parameters]

Subjective Experiments

15 viewers for Setup 1:

We invite 15 viewers to judge our proposed system.

Viewers move backward as the scaling factor increases.

Each viewer examines the images generated from the aforementioned dataset.

Scores range from 1 (poor perceptual constancy) to 5 (good perceptual constancy).

We show the average subjective scores for each scaling factor.

| Scaling Factor | Bicubic | Yang et al. | Mallat and Yu | Ours (s=0.8) | Ours (s=0.99) |
| --- | --- | --- | --- | --- | --- |
| 2 | 2.403 | 3.708 | 2.544 | 3.283 | 3.797 |
| 3 | 2.027 | 3.236 | 2.217 | 2.994 | 3.503 |
| 4 | 1.947 | 2.944 | 2.083 | 2.875 | 3.344 |

15 viewers for Setup 2:

During the revision, the reviewers suggested setting up another experiment to examine our algorithm.

We set up two high-definition displays at different viewing distances to examine our algorithm, which is designed to maintain viewers' visual constancy.

We invite 15 viewers to judge our proposed system.

Each viewer again examines the images generated from the aforementioned dataset.

Scores range from 1 (poor perceptual constancy) to 5 (good perceptual constancy).

We show the average subjective scores for each scaling factor.

| Scaling Factor | Bicubic | Yang et al. | Mallat and Yu | Ours (s=0.8) | Ours (s=0.99) |
| --- | --- | --- | --- | --- | --- |
| 2 | 2.628 | 3.900 | 2.867 | 3.689 | 3.947 |
| 3 | 2.481 | 3.683 | 2.642 | 3.711 | 4.042 |
| 4 | 2.444 | 2.606 | 2.531 | 3.806 | 4.039 |
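To compare the methods at a glance, the per-scale scores in the Setup 1 and Setup 2 tables can be averaged over the three scaling factors. The short sketch below does that arithmetic; the numbers are copied from the two tables, while the helper names are hypothetical and the unweighted mean is an illustrative choice, not a statistic reported by the authors.

```python
# Average subjective scores copied from the Setup 1 and Setup 2 tables.
# Keys are scaling factors; list entries follow the table column order.
METHODS = ["Bicubic", "Yang et al.", "Mallat and Yu", "Ours (s=0.8)", "Ours (s=0.99)"]

SETUP_1 = {
    2: [2.403, 3.708, 2.544, 3.283, 3.797],
    3: [2.027, 3.236, 2.217, 2.994, 3.503],
    4: [1.947, 2.944, 2.083, 2.875, 3.344],
}
SETUP_2 = {
    2: [2.628, 3.900, 2.867, 3.689, 3.947],
    3: [2.481, 3.683, 2.642, 3.711, 4.042],
    4: [2.444, 2.606, 2.531, 3.806, 4.039],
}

def mean_score_per_method(table):
    """Average each method's score over all scaling factors in the table."""
    rows = list(table.values())
    return {
        method: sum(row[i] for row in rows) / len(rows)
        for i, method in enumerate(METHODS)
    }

def best_method(table):
    """Method with the highest mean score (5 = good perceptual constancy)."""
    means = mean_score_per_method(table)
    return max(means, key=means.get)

for name, table in (("Setup 1", SETUP_1), ("Setup 2", SETUP_2)):
    means = mean_score_per_method(table)
    rounded = {method: round(value, 3) for method, value in means.items()}
    print(name, rounded, "best:", best_method(table))
```

Averaging hides per-scale differences, so the full tables remain the reference for any scale-specific comparison.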

BRISQUE Examination across Image Scales:

During the revision, we also computed BRISQUE scores to examine our algorithm.

As Table VI shows, our super-resolution results achieve better natural quality at each scale (a lower BRISQUE score indicates better quality). It has also been reported that enlarging an image may degrade its natural image quality. However, our proposed super-resolution method still preserves more natural features, such as sharp edges and rich textures, and thus maintains better natural quality for viewers on the HD display.

| Scaling Factor | Bicubic | Yang et al. | Mallat and Yu | Ours (s=0.8) | Ours (s=0.99) |
| --- | --- | --- | --- | --- | --- |
| 2 | 38.05 | 36.88 | 41.07 | 36.49 | 30.52 |
| 3 | 51.55 | 50.49 | 48.58 | 43.90 | 37.79 |
| 4 | 59.87 | 50.33 | 59.84 | 50.10 | 42.57 |
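Because a lower BRISQUE score indicates better natural quality, the table can be read by taking the minimum in each row. The following sketch performs that check and also computes the relative reduction with respect to bicubic interpolation; the scores are copied from the table, while the helper names and the choice of bicubic as the baseline are illustrative assumptions.

```python
# BRISQUE scores copied from the table above (lower means better quality).
METHODS = ["Bicubic", "Yang et al.", "Mallat and Yu", "Ours (s=0.8)", "Ours (s=0.99)"]

BRISQUE = {
    2: [38.05, 36.88, 41.07, 36.49, 30.52],
    3: [51.55, 50.49, 48.58, 43.90, 37.79],
    4: [59.87, 50.33, 59.84, 50.10, 42.57],
}

def best_per_scale(table):
    """Method with the lowest (best) BRISQUE score at each scaling factor."""
    return {scale: METHODS[row.index(min(row))] for scale, row in table.items()}

def reduction_vs_bicubic(table, method):
    """Relative BRISQUE reduction of `method` compared with bicubic, per scale."""
    column = METHODS.index(method)
    baseline = METHODS.index("Bicubic")
    return {
        scale: (row[baseline] - row[column]) / row[baseline]
        for scale, row in table.items()
    }

print(best_per_scale(BRISQUE))
for scale, reduction in reduction_vs_bicubic(BRISQUE, "Ours (s=0.99)").items():
    print(f"scale {scale}: {reduction:.1%} lower BRISQUE than bicubic")
```

The scores of every method rise with the scaling factor, which matches the remark that enlarging an image tends to degrade its natural quality.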